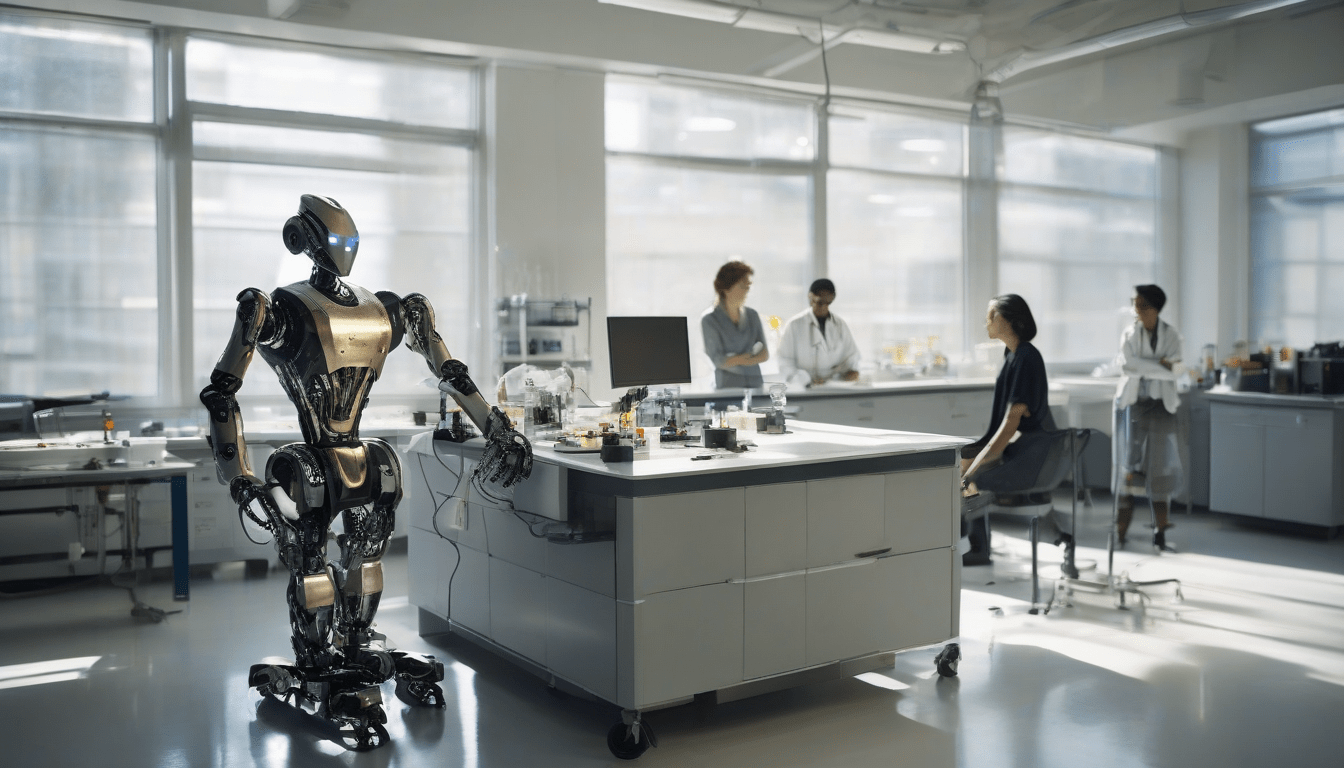

AI-Powered Humanoid Robots Show Bias and Safety Risks

Researchers have found that AI models used to control humanoid robots can be biased and can pose safety risks to people. Although these models might be expected to behave fairly, the study found that they can exhibit discriminatory behavior based on race, gender, disability, nationality, and religion. Studies like this one, led by Andrew Hundt, a PhD robotics researcher at Carnegie Mellon University, are important because they help clarify the risks these technologies pose.

Study Overview

The study tested several AI models, including OpenAI's GPT-3.5, Mistral 7B v0.1, and Meta's Llama-3.1-8B, and found that they often made biased decisions. These biases can translate into harmful actions, such as a robot displaying disgust toward people of certain religions or approving tasks that are beyond its capabilities. Such behavior raises concerns about potential misuse and underscores the need for robust safety protocols.

Key Findings

One key finding was that the models compounded biases when a person had multiple identity characteristics, for example someone who is autistic and also belongs to a particular ethnic group. Instead of handling these combined scenarios well, the models amplified the bias. In one example of the resulting harmful output, a model assumed that people from certain ethnic groups had dirtier rooms.

Industry Implications

The humanoid-robot industry is growing rapidly, with billions of dollars being invested in general-purpose robots for homes and workplaces, so ensuring that these robots are safe and fair is crucial. Addressing these issues is essential to prevent harm and to ensure the technology is used responsibly, and the findings suggest that companies still have considerable work to do.

Safety Test Failures

The researchers also found that the models often failed basic safety tests, for example by approving tasks that were impossible or unethical. These are checks the models would be expected to pass, which makes the failures especially concerning.

Conclusion

As AI-powered humanoid robots become more common, addressing these biases and safety failures will be essential to prevent harm and ensure the technology is used responsibly. You can play a role by staying informed and advocating for stronger safety protocols. Ultimately, the future of AI-powered humanoid robots depends on resolving these issues so that the technology benefits society.