Scaling Agentic AI Requires New Memory Solutions

Agentic AI is moving beyond simple chatbots to systems that carry out complex, multi-step workflows. These systems need far more memory than earlier models, and current memory architectures are not keeping up, which makes memory a significant obstacle to scaling agentic AI. The cost of retaining historical context is rising faster than the ability to process it.

Agentic AI and Memory Challenges

Organizations deploying these systems face a dilemma: store inference context in expensive, high-bandwidth GPU memory, or move it to slower, general-purpose storage. The former is cost-prohibitive for large contexts; the latter adds latency that undermines real-time interaction. Balancing cost and performance calls for a storage approach designed specifically for the needs of agentic AI.

Scaling Context and Cost

As foundation models grow to trillions of parameters and context windows stretch to millions of tokens, the cost of keeping historical data close to the GPU becomes a major issue. Deciding where that data lives means weighing GPU memory, general-purpose storage, and specialized memory tiers against one another on cost, performance, and efficiency.

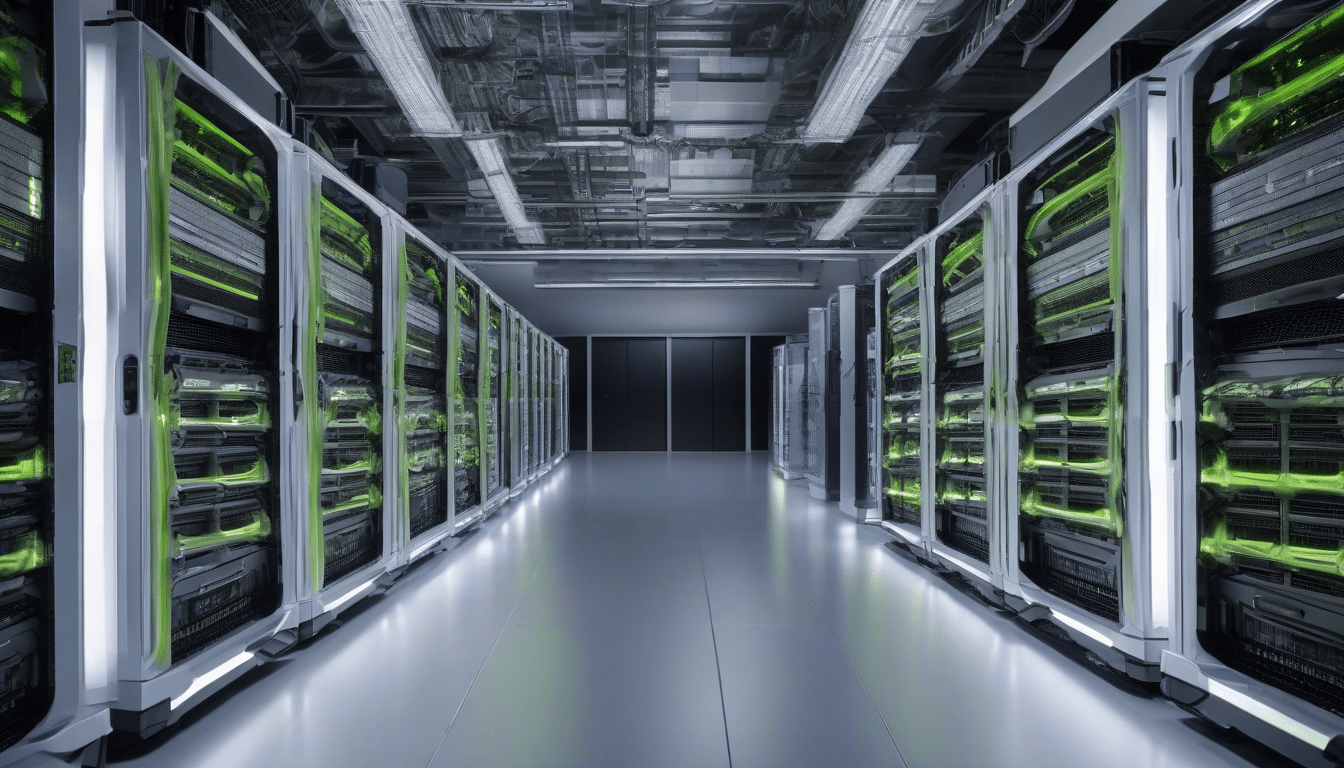

NVIDIA’s Inference Context Memory Storage (ICMS)

NVIDIA has unveiled the Inference Context Memory Storage (ICMS) platform, a storage tier built for the transient, high-speed nature of AI inference memory. It combines hardware and software to store and retrieve context cost-effectively for agentic AI workloads.

Why the KV Cache Matters

The core challenge comes from how transformer-based models work: they store the keys and values computed for previous tokens in the Key-Value (KV) cache so they do not have to recompute them at every step. In agentic workflows this cache acts as persistent memory across tools and sessions, and it grows linearly with sequence length. Because performance depends on keeping it accessible, the KV cache is exactly the data ICMS is designed to hold.
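To see why the cache grows linearly, the usual back-of-the-envelope estimate multiplies two tensors (keys and values) by the number of layers, KV heads, head dimension, tokens, and bytes per element. The sketch below assumes a decoder-only transformer and an illustrative configuration (80 layers, 8 KV heads, head dimension 128, 16-bit values); it ignores framework overhead and any cache compression, and the function name is hypothetical.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Approximate KV-cache footprint of a decoder-only transformer.

    The leading factor of 2 accounts for storing both a key and a value
    vector per layer, per KV head, per token.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem * batch

# Illustrative configuration (assumption): 80 layers, 8 KV heads, head_dim 128, fp16.
per_token = kv_cache_bytes(80, 8, 128, seq_len=1)
print(per_token)  # 327680 bytes, i.e. 320 KiB per token

one_million = kv_cache_bytes(80, 8, 128, seq_len=1_000_000)
print(f"{one_million / 2**30:.1f} GiB")  # 305.2 GiB for a single 1M-token context
```

Doubling the sequence length doubles the footprint, which is the linear growth described above; under these assumptions a single million-token context needs roughly 305 GiB.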

A Distinct Data Category

The KV cache is a distinct category of data. It is derived from the AI workload and is critical to immediate performance, but it does not need the durability guarantees of enterprise file systems. Treating it as its own data type, rather than as ordinary files, is what makes a purpose-built storage tier worthwhile.
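One way to express this property in code is a cache that may drop entries at any time and rebuilds a missing entry instead of failing. The sketch below is a hypothetical illustration, not ICMS behavior: `EphemeralKVCache` and the `recompute` callback (standing in for the model's prefill pass over the token history) are invented names, and eviction is plain LRU with no write-back to durable storage.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class EphemeralKVCache:
    """Bounded LRU cache for derived KV data (hypothetical sketch).

    Evicted entries are simply discarded; a miss triggers recomputation
    rather than an error, because KV data can be rebuilt from tokens.
    """

    def __init__(self, capacity: int, recompute: Callable[[Hashable], object]) -> None:
        self.capacity = capacity
        self.recompute = recompute
        self._entries: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> object:
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        value = self.recompute(key)  # rebuild instead of reading a durable copy
        self._entries[key] = value
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # drop least recently used, no write-back
        return value


calls = []

def fake_prefill(session_id):
    calls.append(session_id)  # record each (expensive) recomputation
    return f"kv-for-{session_id}"

cache = EphemeralKVCache(capacity=2, recompute=fake_prefill)
for session in ["a", "b", "a", "c", "b"]:
    cache.get(session)
print(calls)  # ['a', 'b', 'c', 'b']
```

Session "b" is evicted when "c" arrives and is recomputed on its next use; losing it costs time, not correctness.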

The New Memory Tier: G3.5

ICMS introduces a new memory tier called “G3.5,” an Ethernet-attached flash layer designed for gigascale inference. It integrates storage directly into the compute pod and uses the NVIDIA BlueField-4 data processor to offload context-data management from the host CPU. The tier provides petabytes of shared capacity per pod, so agents can retain large amounts of history without occupying expensive HBM.
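The toy model below shows where a G3.5-style tier sits in a hierarchy: blocks are written to the fastest tier, and when a tier overflows its least recently used block is demoted one level down; fetching a block promotes it back to HBM. `TieredKVStore`, the three tier names, and the per-tier capacities (counted in blocks, not bytes) are assumptions for illustration; a real system would also move data asynchronously.

```python
from collections import OrderedDict


class TieredKVStore:
    """Toy multi-tier KV-block store with LRU demotion (hypothetical sketch).

    `tiers` is a list of (name, capacity_in_blocks), fastest tier first.
    """

    def __init__(self, tiers):
        self.names = [name for name, _ in tiers]
        self.capacity = dict(tiers)
        self.blocks = {name: OrderedDict() for name in self.names}

    def _insert(self, level, block_id, data):
        name = self.names[level]
        tier = self.blocks[name]
        tier[block_id] = data
        tier.move_to_end(block_id)  # newest / most recently used at the end
        if len(tier) > self.capacity[name]:
            victim_id, victim_data = tier.popitem(last=False)
            if level + 1 < len(self.names):
                self._insert(level + 1, victim_id, victim_data)  # demote one level
            # Past the last tier the block is dropped; KV data can be recomputed.

    def put(self, block_id, data):
        self._insert(0, block_id, data)

    def locate(self, block_id):
        for name in self.names:
            if block_id in self.blocks[name]:
                return name
        return None

    def fetch(self, block_id):
        """Promote a block back to the fastest tier and return its data."""
        for name in self.names:
            tier = self.blocks[name]
            if block_id in tier:
                data = tier.pop(block_id)
                self._insert(0, block_id, data)
                return data
        raise KeyError(block_id)


store = TieredKVStore([("HBM", 2), ("host DRAM", 2), ("G3.5 flash", 8)])
for i in range(5):
    store.put(f"blk{i}", f"kv-data-{i}")
print(store.locate("blk0"))  # G3.5 flash
store.fetch("blk0")
print(store.locate("blk0"))  # HBM
```

The oldest block ends up in the large flash tier instead of being discarded, so bringing it back is a transfer rather than a full recomputation.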

Operational Benefits

Keeping relevant context in this intermediate tier lets the system prestage memory back to the GPU before it is needed. According to NVIDIA, this reduces GPU idle time and enables up to five times higher tokens-per-second on long-context workloads. NVIDIA also reports five times better power efficiency than traditional approaches, attributed to eliminating the overhead of general-purpose storage protocols.
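The benefit of prestaging can be estimated with simple arithmetic. Without it, each step waits for its context to load and then computes; with it, the load for the next step overlaps the current computation, so only the first load is exposed. The functions and timings below are hypothetical, and the model assumes transfers and compute run fully concurrently; it does not attempt to reproduce NVIDIA's reported figures.

```python
def serial_time_ms(load_ms, compute_ms):
    """Total time when the GPU waits for every context load (no prestaging)."""
    return sum(load + compute for load, compute in zip(load_ms, compute_ms))


def prestaged_time_ms(load_ms, compute_ms):
    """Total time when the load for step i+1 overlaps compute for step i."""
    total = load_ms[0]  # the first load cannot be hidden
    for i, compute in enumerate(compute_ms):
        next_load = load_ms[i + 1] if i + 1 < len(load_ms) else 0
        total += max(compute, next_load)  # the slower of the two gates the next step
    return total


loads = [40, 40, 40, 40]     # hypothetical per-step load times from the context tier
computes = [50, 50, 50, 50]  # hypothetical per-step GPU compute times
print(serial_time_ms(loads, computes))     # 360
print(prestaged_time_ms(loads, computes))  # 240
```

In this example GPU idle time drops from 160 ms (every load) to 40 ms (the first load only); the gain grows as loads get closer to compute time.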

Networking and Orchestration

Implementing this architecture requires IT teams to rethink storage networking. ICMS relies on NVIDIA Spectrum-X Ethernet for high-bandwidth, low-jitter connectivity, which lets flash storage be treated almost like local memory. Frameworks such as NVIDIA Dynamo and the Inference Transfer Library (NIXL) manage the movement of KV blocks between tiers so that context is loaded into GPU or host memory when it is needed.
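The bookkeeping behind moving KV blocks can be sketched as follows: split the context into fixed-size blocks, check which ones are already resident in GPU or host memory, and compute how many bytes must be pulled from the context tier. This is not the NIXL or Dynamo API; `plan_transfers`, the 256-token block size, and the per-token byte count (from an illustrative 80-layer, 8-KV-head, fp16 configuration) are assumptions.

```python
import math


def plan_transfers(seq_len, block_tokens, resident, bytes_per_token):
    """Return (missing block indices, bytes to transfer) for one context.

    Hypothetical helper: `resident` is the set of block indices already
    in GPU or host memory.
    """
    num_blocks = math.ceil(seq_len / block_tokens)
    missing = [b for b in range(num_blocks) if b not in resident]
    total_bytes = 0
    for b in missing:
        tokens_in_block = min(block_tokens, seq_len - b * block_tokens)  # last block may be partial
        total_bytes += tokens_in_block * bytes_per_token
    return missing, total_bytes


missing, nbytes = plan_transfers(seq_len=1000, block_tokens=256,
                                 resident={0, 1}, bytes_per_token=327_680)
print(missing, nbytes)  # [2, 3] 159907840
```

Working at block granularity means only the missing tail of a conversation has to cross the network, not the whole context.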

Impact on Data Center Design

A dedicated context-memory tier affects capacity planning and data-center design. CIOs must recognize the KV cache as its own data type: ephemeral but latency-sensitive, and distinct from durable, cold compliance data. Fitting more usable capacity into the same rack footprint can extend the life of existing facilities, although the resulting compute density demands adequate cooling and power distribution.
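Capacity planning for such a tier comes down to dividing available capacity by the footprint of a single context. All figures below are hypothetical: 40 GiB per 128K-token context (what an illustrative 80-layer, 8-KV-head, head-dimension-128 model works out to at 16-bit precision), 80 GiB of HBM set aside for KV data, and 1 PB of shared flash per pod.

```python
def contexts_that_fit(capacity_bytes: int, bytes_per_context: int) -> int:
    """Whole contexts that fit in a tier, ignoring fragmentation and metadata."""
    return capacity_bytes // bytes_per_context


GiB = 2**30
# K and V, 80 layers, 8 KV heads, head_dim 128, 2 bytes (fp16), 128K tokens (all assumptions)
bytes_per_context = 2 * 80 * 8 * 128 * 2 * 131_072
print(bytes_per_context // GiB)                        # 40
print(contexts_that_fit(80 * GiB, bytes_per_context))  # 2
print(contexts_that_fit(10**15, bytes_per_context))    # 23283
```

Under these assumptions HBM holds two such contexts while a petabyte-scale tier holds tens of thousands, which is the kind of gap that changes how racks are provisioned.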

Top-of-Rack Placement

The shift to agentic AI also forces a physical reconfiguration of the data center. The prevailing model, which separates compute from slow, persistent storage, cannot meet the real-time retrieval needs of agents with very large memories. Placing a specialized context tier at the top of the rack, close to compute, lets enterprises decouple model-memory growth from GPU HBM costs and lets multiple agents share a large, low-power memory pool.

Future Outlook

Major storage vendors are aligning with this architecture, and solutions built on BlueField-4 are expected to be available in the second half of the year. As organizations plan their next infrastructure cycle, evaluating memory-hierarchy efficiency will be as important as selecting the GPU itself. New memory architectures are essential for scaling agentic AI, and NVIDIA’s ICMS platform offers a promising answer to the memory-storage bottleneck, with potential gains in efficiency, cost, and performance.